An interesting Tailscale + Docker gotcha


As I’ve written about before, I use Tailscale for a lot of things. I thought I had it set up in a reasonably secure manner, but I recently noticed a problem.

I use Tailscale’s ACLs to limit what each node can access, based on the tags I apply to it. So an app node can’t access anything via Tailscale, while an integration or server node can access things tagged with either app or integration. This is expressed pretty simply in the Tailscale ACL JSON:

```json
{
  "tagOwners": {
    // Servers that can be SSH'd into
    "tag:server": [],
    // Applications that are exposed on tailscale but never connect out
    "tag:app": [],
    // Things which talk to other services over tailscale (connecting to apps etc)
    "tag:integration": [],
  },
  "grants": [
    {
      // Users can access everything
      "src": ["autogroup:member"],
      "dst": ["*"],
      "ip": ["*"],
    },
    {
      // Servers and integrations can access integrations and apps
      "src": ["tag:server", "tag:integration"],
      "dst": ["tag:integration", "tag:app"],
      "ip": ["*"],
    },
  ],
}
```

Over the past week I’ve been setting up a private Forgejo instance behind Tailscale, complete with an actions runner that runs things using a docker-in-docker container. I didn’t want the runner knowing anything about Tailscale, so I had it configured to speak to Forgejo directly over HTTP (forgejo:3000) instead of using the full Tailscale HTTPS URL that I use when accessing it (https://git.example-net.ts.net/)¹.

Everything was going fine, until I forgot to do that translation… and it worked. My Forgejo action runners could access anything on my tailnet. I run several things on Tailscale that just have authentication turned off, on the basis that only authorised devices can access them. Things like the admin interface for this website. I definitely didn’t intend for any workflow I run on my git server to have access to edit my website!

This wasn’t just limited to Forgejo, either. Any Docker container I was running could access the tailnet. In hindsight it’s fairly obvious why: the host is running Tailscale, connected as a node tagged with my server tag. That creates a tailscale0 interface, and automagically sets up routes and iptables rules to send tailnet traffic over that interface. Docker also automagically sets up iptables rules to bridge container traffic and masquerade it behind the host’s address, and apparently these two sets of rules interact in such a way that traffic from Docker containers is allowed out via the tailscale0 interface. Once it’s masqueraded, it reaches other nodes looking exactly like traffic from the host’s own server-tagged node.

I say it’s fairly obvious in hindsight (there’s no reason why Docker would special-case any particular host interface, after all), but it still feels pretty surprising. Because both bits of software inject their own iptables rules, I never really had a good mental model for how they interact. In my head, the host’s Tailscale node was a completely separate building block to Docker. It would be a pain to use either of them if they didn’t set up these rules, but it’s also one of the reasons I don’t really like “magical” things².

There are lots of ways to fix this, but none of them feel particularly great. You can configure both Tailscale and Docker to not automatically fiddle with iptables and handle the rules yourself, but I really hate dealing with iptables³. As a stopgap I did hold my nose and add some iptables rules to drop traffic to the tailscale0 interface if it originated from the IP ranges that Docker was configured to use:

```shell
# Drop anything from Docker's address ranges that would leave via tailscale0
iptables -I DOCKER-USER -s 192.168.0.0/16 -o tailscale0 -j DROP
iptables -I DOCKER-USER -s 172.16.0.0/12 -o tailscale0 -j DROP
```

The DOCKER-USER chain is a nice little escape hatch; it comes before the main auto-generated DOCKER chain, and Docker leaves the rules in it alone.

So I added these rules, and felt pretty good about myself, and then… everything started breaking in weird ways. After some debugging I realised the problem was DNS⁴. The server’s DNS resolver is 100.100.100.100, a special Tailscale address. This is so that it can resolve tailnet hostnames via MagicDNS (and so I don’t have to configure my custom DNS servers manually on each device; Tailscale does it for me). My new iptables rules inadvertently dropped all the DNS packets coming from Docker containers. D’oh.

Obviously the solution here is to double down and add MORE iptables rules:

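```shell
# Let DNS queries to Tailscale's resolver through. Because -I inserts at the
# top of the chain, these land above the DROP rules and match first.
iptables -I DOCKER-USER -s 192.168.0.0/16 -d 100.100.100.100 -o tailscale0 -j ACCEPT
iptables -I DOCKER-USER -s 172.16.0.0/12 -d 100.100.100.100 -o tailscale0 -j ACCEPT
```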

This got DNS working again, but it all feels a bit gross. Not least because to actually make these persist you need to use iptables-save, and then you also get all the rules that both Docker and Tailscale have inserted. I came across a script to filter out the Docker ones, but… yuck⁵.

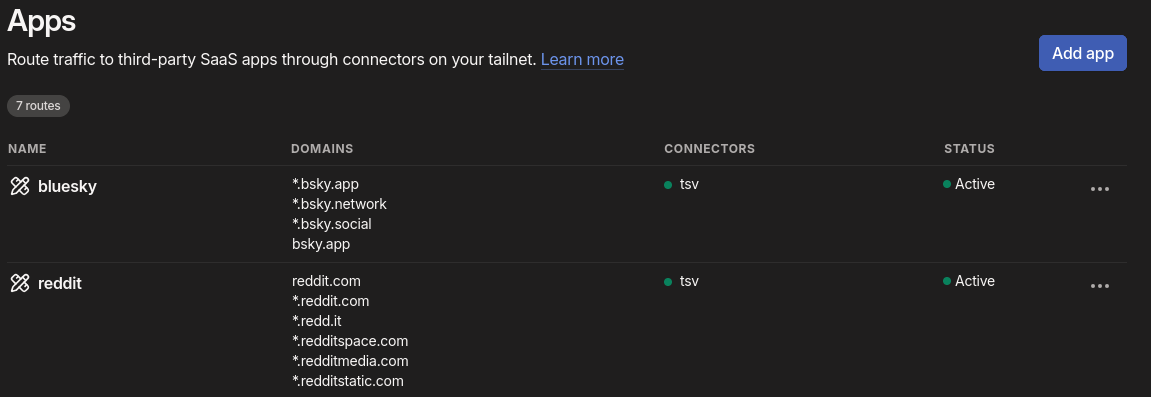
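One way to sidestep iptables-save entirely is to re-apply just these four rules each time Docker starts. The following is a hypothetical sketch, not the setup described in this post: the file name, `reinsert_rule`, `apply_rules` and the `IPTABLES` override are all made up for illustration, and it assumes Docker's networks sit inside 192.168.0.0/16 and 172.16.0.0/12 (the /12 written with its proper network address, which iptables masks any /12 address down to anyway), that the host resolver is 100.100.100.100, and that something such as a systemd unit ordered after docker.service runs it.

```shell
# ts-docker-isolation.sh (hypothetical name): source this file, then call
# apply_rules as root once Docker has created the DOCKER-USER chain.
# Assumptions: Docker's networks live inside the two ranges below, and the
# host resolver is Tailscale's 100.100.100.100.

# Overridable only so the logic can be exercised without touching the firewall.
: "${IPTABLES:=iptables}"

# Remove every existing copy of a DOCKER-USER rule (-D fails once none are
# left), then insert a single copy at the top of the chain. Re-running this
# never stacks up duplicates and always restores the intended order.
reinsert_rule() {
    while "$IPTABLES" -D DOCKER-USER "$@" 2>/dev/null; do :; done
    "$IPTABLES" -I DOCKER-USER "$@"
}

apply_rules() {
    for net in 192.168.0.0/16 172.16.0.0/12; do
        # DROP goes in first, so the ACCEPT inserted next sits above it and
        # DNS queries to 100.100.100.100 still get through.
        reinsert_rule -s "$net" -o tailscale0 -j DROP || return
        reinsert_rule -s "$net" -d 100.100.100.100 -o tailscale0 -j ACCEPT || return
    done
}
```

For example: `sudo sh -c '. ./ts-docker-isolation.sh && apply_rules'`. Deleting before inserting (rather than checking with `iptables -C` first) is what keeps the ACCEPT rules above the DROP rules even if only some of them survived from an earlier run.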

I realised a cleaner idea might just be to lock down what server-tagged nodes could access within Tailscale itself. That way I can avoid touching iptables at all. It’s handy to be able to curl services from the host when debugging, but it’s not really necessary. So I removed that access, and… stuff broke again. I’m using my Forgejo instance as a registry for some of the Docker images I run, so the Docker daemon needs to be able to reach it. I ended up making a new tag for infrastructure, which can be accessed from server devices. This does still allow all the Docker containers to reach Forgejo, but I already have it set up with appropriate access controls and public/private repository splits. Forgejo is a service designed to run publicly, so this seems a reasonable trade-off for convenience. I used the tests feature of Tailscale’s ACL config to make sure I’d got the rules right:

```json
{
  "tests": [
    {
      // Servers can only access infrastructure
      "src": "tag:server",
      "proto": "tcp",
      "allow": [
        "tag:infrastructure:8080",
      ],
      "deny": [
        "100.84.16.43:8080",
        "me@example.com:8080",
        "tag:server:8080",
        "tag:app:8080",
        "tag:integration:8080",
      ],
    },
  ],
}
```

The whole situation still feels a bit messy. If I ever get around to switching to nftables I might loop back and manually craft some rules for routing traffic, instead of leaving Tailscale and Docker to do their own thing.

1. I discovered later on that logging into the container registry at forgejo:3000 actually issued a redirect to https://git.example-net.ts.net/, so this was all basically for naught…
2. I didn’t use Tailscale’s MagicDNS for a long time just because the word “magic” put me off. Only when I eventually got around to learning how it worked, and seeing that it wasn’t really that magical under the hood, did I change my mind.
3. nftables seems far better in lots of ways, but I can’t really be bothered migrating. Maybe next time I reimage the server for whatever reason…
4. It’s always DNS…
5. Again, nftables would almost certainly help here. It actually has (gasp) configuration files. But again, I really didn’t want to spend the time migrating.

